Tube Rank: Your Guide to Video Success

Discover tips and insights for optimizing your video presence.

Machine Learning: When Algorithms Go on a Power Trip

Discover the wild side of machine learning as algorithms take control! Are they helping us or causing chaos? Find out now!

Understanding Algorithmic Bias: When Machine Learning Models Misbehave

Understanding algorithmic bias is crucial in today's data-driven world, where machine learning models increasingly inform decisions across many sectors. Algorithmic bias occurs when a model produces systematically prejudiced results because its training data is inaccurate or unbalanced. For example, a model trained on historical data that reflects existing societal biases may perpetuate those biases and produce discriminatory outcomes. Developers, businesses, and policymakers need to understand the sources and impacts of this bias in order to build fair and inclusive systems that benefit all users.

Several factors contribute to algorithmic bias. Sampling bias arises when the data used to train the model does not accurately represent the intended population. Labeling bias arises when subjective interpretations of data labels skew results. The lack of transparency in many machine learning models also makes these biases hard to identify and mitigate. To address these issues, organizations can adopt strategies such as diverse data collection, continuous model evaluation, and ethical review built into the design of their systems. These steps help machine learning technologies enhance fairness rather than entrench existing prejudices.
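One way to make sampling bias concrete is to compare each group's share of the training data with its share of the intended population. The sketch below is a minimal illustration, not a complete fairness check. The function name `representation_gaps`, the group labels, and the population shares are all hypothetical and chosen only for the example.

```python
from collections import Counter


def representation_gaps(train_groups, population_shares):
    """Return (share in training data - share in population) per group.

    A positive gap means the group is over-represented in the training
    data; a negative gap means it is under-represented.
    """
    total = len(train_groups)
    if total == 0:
        raise ValueError("train_groups must not be empty")
    counts = Counter(train_groups)
    return {
        group: counts.get(group, 0) / total - expected_share
        for group, expected_share in population_shares.items()
    }


# Hypothetical data: group "A" makes up 80% of the training rows,
# while the intended population is assumed to be split evenly.
train_groups = ["A"] * 80 + ["B"] * 20
population_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(train_groups, population_shares)
for group, gap in gaps.items():
    print(f"group {group}: gap = {gap:+.2f}")
```

In this made-up example, group B is under-represented by 30 percentage points, which is the kind of imbalance that more diverse data collection is meant to correct.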

The Ethics of AI: Ensuring Responsible Machine Learning Practices

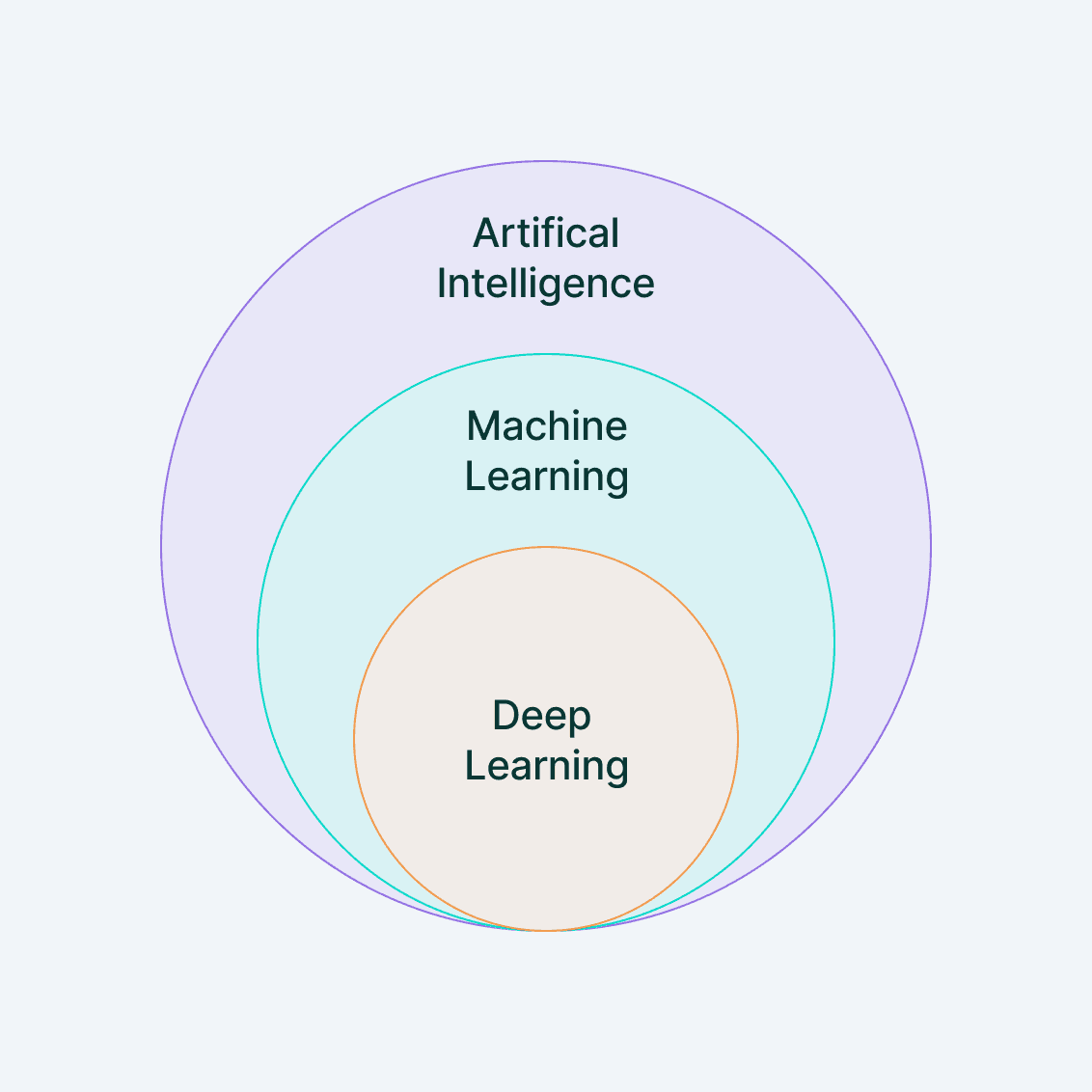

The rise of Artificial Intelligence (AI) has transformed various sectors, but it also brings forth significant ethical challenges. As machine learning algorithms become more powerful and autonomous, it is imperative to ensure that their development and deployment adhere to responsible practices. Ethical considerations include fairness, accountability, and transparency in AI systems. Developers must prioritize unbiased data collection and representation to avoid reinforcing existing societal inequalities.

To cultivate a culture of responsible machine learning, organizations should implement comprehensive guidelines and training programs that emphasize ethical standards. Establishing an ethical framework can involve creating diverse teams that bring varied perspectives to the table, as well as conducting regular audits of AI systems to ensure compliance with ethical norms. As society continues to integrate AI into everyday life, upholding ethical practices will be crucial in fostering public trust and ensuring that technology serves humanity responsibly.
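As a rough illustration of what one step in a regular audit might look like, the sketch below compares how often a model returns a positive decision for each group and flags large differences for human review. The helper names, the sample predictions, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb) are assumptions made for this example. A real audit would choose its metrics and thresholds to fit its own context.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive decision
    return min(rates.values()) / highest


# Hypothetical audit sample: 10 decisions per group.
groups = ["A"] * 10 + ["B"] * 10
predictions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumed cutoff for this sketch
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 2), needs_review)
```

A ratio well below the chosen threshold does not prove the system is unfair, but it is a reasonable trigger for the closer human review that an ethical framework calls for.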

Are We Losing Control? The Dangers of Autonomous Algorithms

As we increasingly integrate technology into our daily lives, the rise of autonomous algorithms has sparked a critical debate: Are we losing control? These algorithms, powered by artificial intelligence, can analyze vast amounts of data and make decisions faster than any human could. However, this speed comes with inherent risks, from biased outcomes to unforeseen consequences. For instance, the decisions made by these algorithms can affect everything from credit scores to law enforcement, often without human oversight. As we relinquish control to machine-generated decisions, we must ask ourselves: are we truly prepared for the dangers of autonomous algorithms?

The situation is further complicated by the lack of transparency in many algorithmic processes. Traditional accountability mechanisms struggle to keep pace with rapid advances in AI, making it difficult for consumers and regulators to understand how these systems work. This opacity can lead to mistrust and fear. To ease these fears and regain a measure of control, we need to make algorithms more transparent through strict regulation and ethical guidelines that ensure these technologies are developed responsibly. Only then can we harness the benefits of autonomous algorithms without succumbing to their potential dangers.